04. Cache

- «Speed»: latency

- «Speed»: trhoughput

- + (hardware specific) «Speed»: granularity (e. g. reading from disk by sectors vs. reading from disk cache by bytes)

- Cache

- keeping an actual part of «slow» storage data on «fast» storage

Why cache < storage?

- Cost: energy, money

- Effectiveness (search among lesser data / decoding lesser address)

What is «actuality»? See Locality_of_reference

- Temporal locality (once referenced ⇒ can be referenced soon again)

- Spatial locality (once referenced ⇒ neighbours can be referenced soon)

(other: branches and equidistant references)

What to keep in cache:

- What was read from storage

+ certain amount of following data (e. g. disk read)

- + prediction voodoo (e. g. equidistant)

TODO What to throw out of cache:

- Random: easy implementation, but inefficient;

LFU (less frequently used): need counters and age meters, and search;

- LRU (less recently used): need age and search;

- FIFO: relatively easy, but ineffective

FIFO+LRU: needs direct queue modification, but no age/counters (newly arrived element appends (or moves) to te top of the queue

There always is worst case algorithm for every cache architecture.

- Cache hit

- access to cached element

- Cache miss

- access to non-cached element

We must know address (storage index) of cached element. If element is too small, caching is ineffective because of too much metadata:

| 00 | 10 04 00 00 | a0 78 4f 95 |

- 8 bits — age

- 30 bits — address

- 32 bits — cached word

38 bits of metadata over 32 bits of data.

Note: address is 30 bits instead of 32 because we need no trailing two bits, which are zero if we addressing a word (4 bytes) instead of byte.

So let's go further and cache a whole memory block:

| 00 | 10 04 00 | a0 78 4f 95 … 34 45|

- 8 bits — age

- 24 bits — address tag

- 8192 bits — cache line (256 words)

32 bits of metadata over 8192 bits of data.

- Tag

- part of memory address that corresponds to a memory block

- Line

- memory block that corresponds a tag

Caching a line of words at once (and probably by access to one word only) is statistically good tactics because of spatial locality

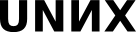

Direct cache

A line can cache one of selected list of blocks. If tags are equal, this is hit, if not equal or unused, this is miss (and cache line is filled).

- Tag size = number of concurrent lines bit length (address // cache size)

- Line number = cache size // line size

- (this is commonly used formula)

- Complies to spatial locality, but weak to locality breaches

- Has effective implementation

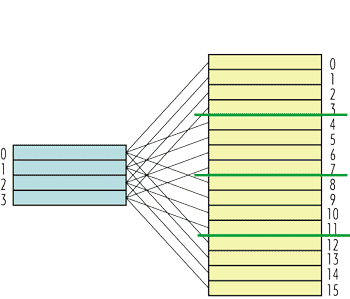

Associative cache

Any line can cache any memory block (aligned to cache line size).

- Tag size = number of blocks (address // line size ) ⇒ larger than in direct cache

- Line numbers are stored when cached

Needs hardware implementation of tag searching (we don't know where line is cached, if it is)

- ⇒ Effective if small

- Complies to temporal locality, strong to spatial locality breach

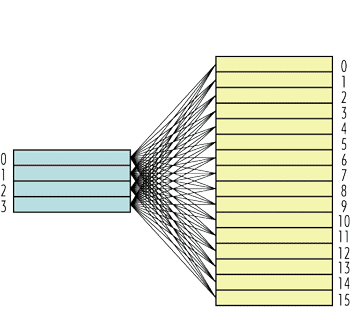

Multi-associative cache

How to violate spatial locality:

- Perform random access to various addresses (rarely accidental)

Have more than one spots of code/data flow (e. g. in multitasking environment, and that is common)

Multi-associative cache is superposition of direct and associative cache:

- Each bank works as direct cache

- ⇒ tag size is equal to direct cache tag size

- There are a number of banks, and data is searched among them in associated manner

- Depending on number of banks, search is relatively fast

- Can hold multiple locality spots

Write cache

- Increases performance

- Temporal locality ⇒ multiple write to cache leads to single writ to storage

Cache strategies:

- Write through: no write cache

- if already cached — need to update

- if cache is missed:

- do nothing (only reads are cached)

- or

- also cache data as if there was read (but this adds complexity and is unexpectedly ineffective)

- do nothing (only reads are cached)

- Write back: program continues after writing to cache, actual memory write is performed «at the background» somehow

- hot line: cache line that is no synchronized with memory

- temporal locality: keep hot lines in cache as long as possible

any other memory access, read or write, (e. g. DMA) must be

- restructured to work through cache

- or

- cause cache flush

- or

- invalidate corresponded cache line

- restructured to work through cache

- hot line: cache line that is no synchronized with memory